Confidential pharma campaign — AI-assisted optical reconstruction

A global pharma campaign (delivered via Prodigious, London) reached me after principal photography revealed a serious optical defect: around 70–80% of the footage was too soft or distorted to use. I was asked to save the campaign if at all possible, without leaving visible VFX fingerprints.

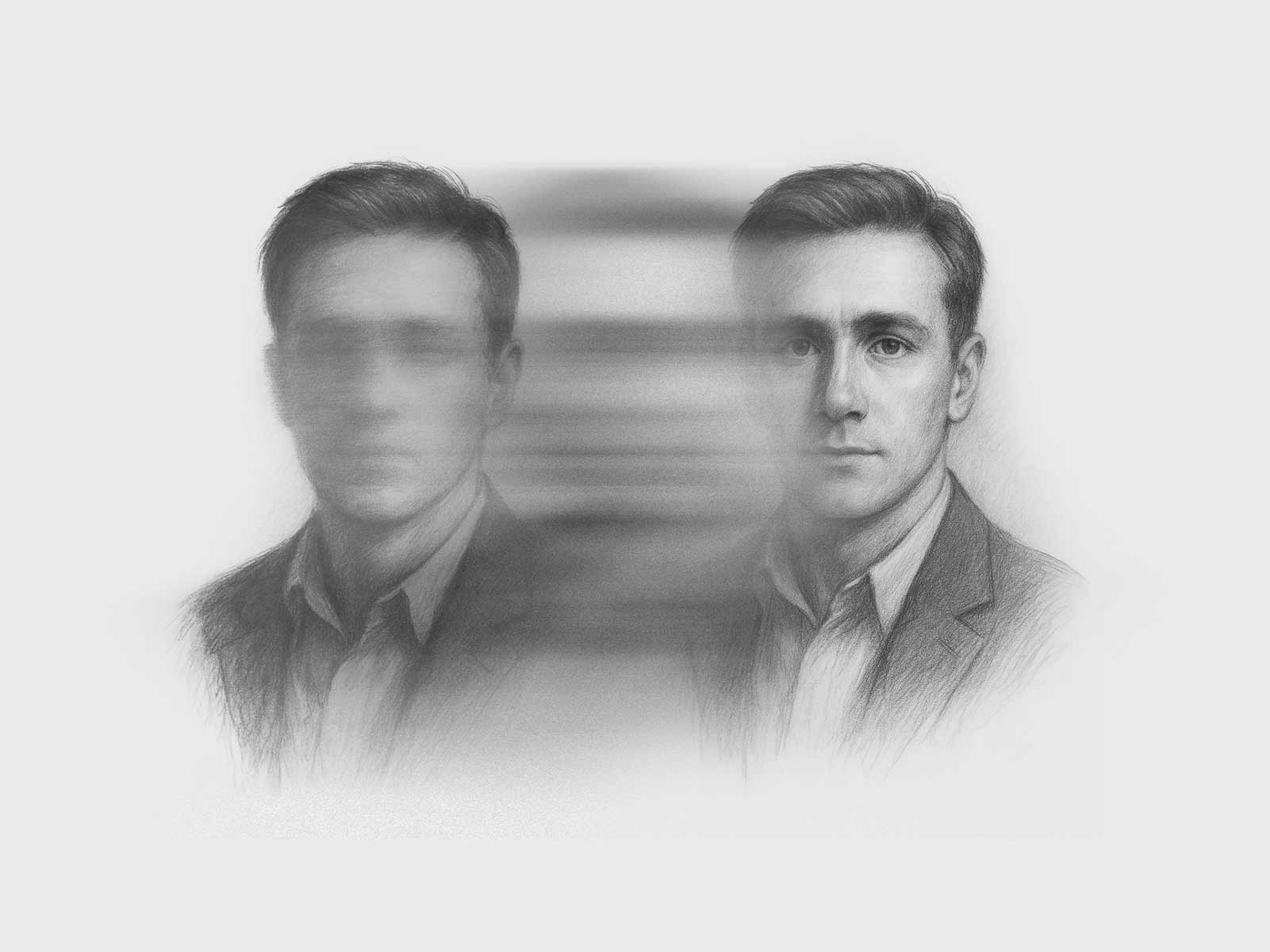

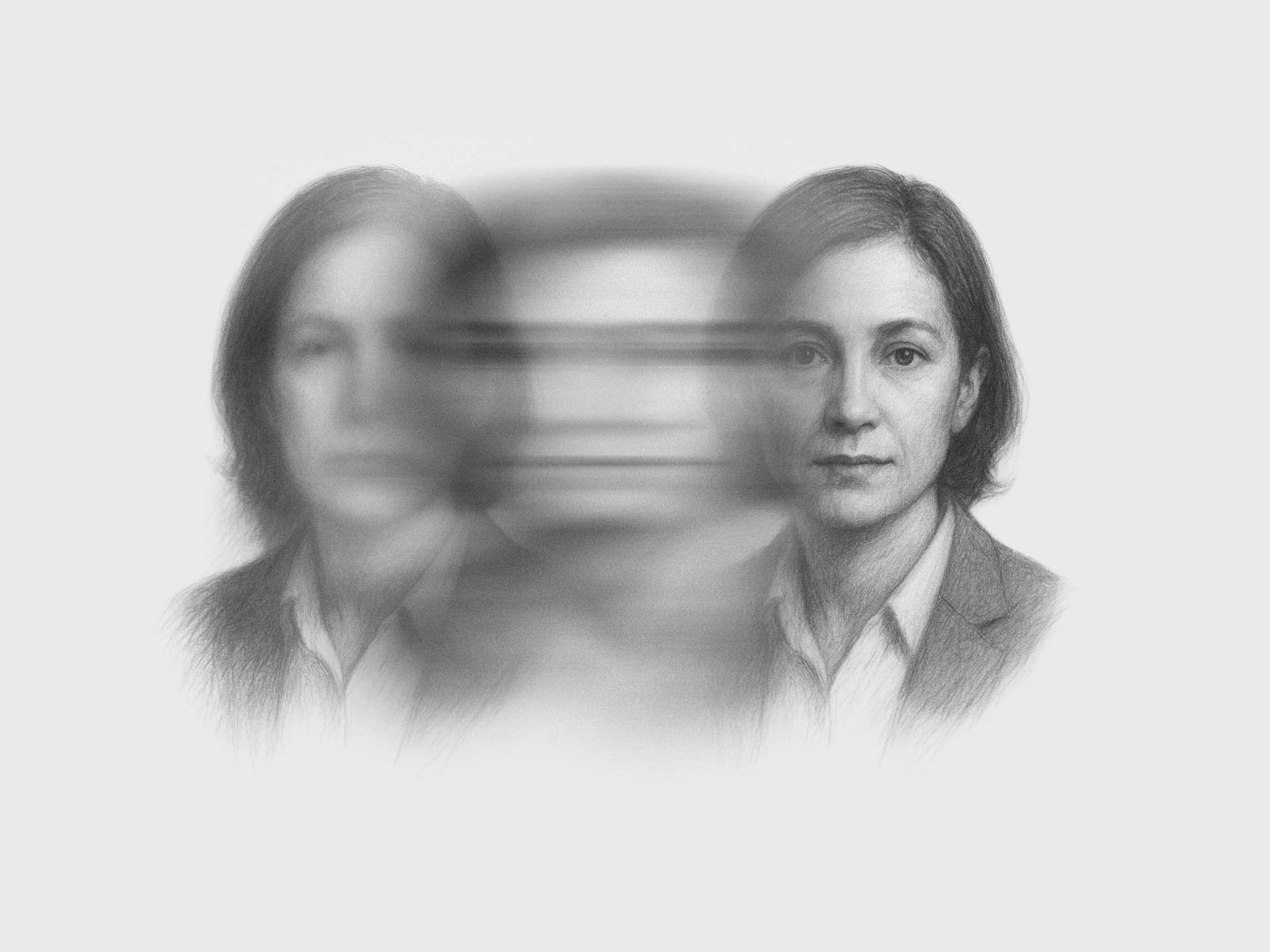

I built a hybrid workflow that combined classic VFX for tracking, blending and cleanup with a custom-trained AI diffusion model to reconstruct the talent's lost facial and texture detail. The model wasn't used to invent new looks; it was constrained to preserve identity and natural skin. Each shot ran through an iterative feedback loop several times, building up detail gradually until it was entirely synthetic yet indistinguishable from genuine optical detail. Wherever the AI added detail, I balanced it by hand so the image read as optically clean rather than "processed".

The result was a full salvage of the core plates. Editors could cut the campaign as intended, and the final pictures looked as if they had been shot cleanly in camera.

Because of NDAs, I can't name the brand or show production frames. The images shown here are illustrative representations of the process: taking optically compromised material and synthesising the missing detail back until the result reads as naturally sharp and stays consistent in motion.